The Data and AI-Ready Enterprise: Fundamental Principles of High-Performance Compute

Author: Tony Bishop, SVP Enterprise Platform and Solutions, Digital Realty

High-performance computing (HPC) is technology built to process massive amounts of data by using multiple nodes, or computer clusters, that run in parallel to complete a task or series of tasks.
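To make the idea of parallel nodes concrete, here is a minimal Python sketch of the split, process, and combine pattern behind that definition. It is an illustration under stated assumptions, not a description of any production HPC stack: threads on a single machine stand in for cluster nodes, and the function names and the sum-of-squares workload are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, num_workers):
    """Divide the input into roughly equal slices, one per worker."""
    chunk_size = max(1, -(-len(values) // num_workers))  # ceiling division
    return [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]


def partial_sum_of_squares(chunk):
    """The work each worker (a stand-in for a node) does on its own slice."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, num_workers=4):
    """Scatter the data, compute partial results concurrently, then combine."""
    chunks = split_into_chunks(values, num_workers)
    # Assumption: a thread pool models the cluster; a real deployment would
    # distribute the chunks across separate machines over an interconnect.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(10))))
```

The same three steps apply at cluster scale; what changes is that the chunks travel over a network, which is why interconnects and data placement matter so much in the sections that follow.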

Most recently, at Digital Realty, we’ve seen examples of HPC that include:

- Weather forecasting

- Supercomputers that advance innovation in life sciences

- Genome mapping for medical breakthroughs

- Semiconductor testing

- Complex, multi-dimensional simulations

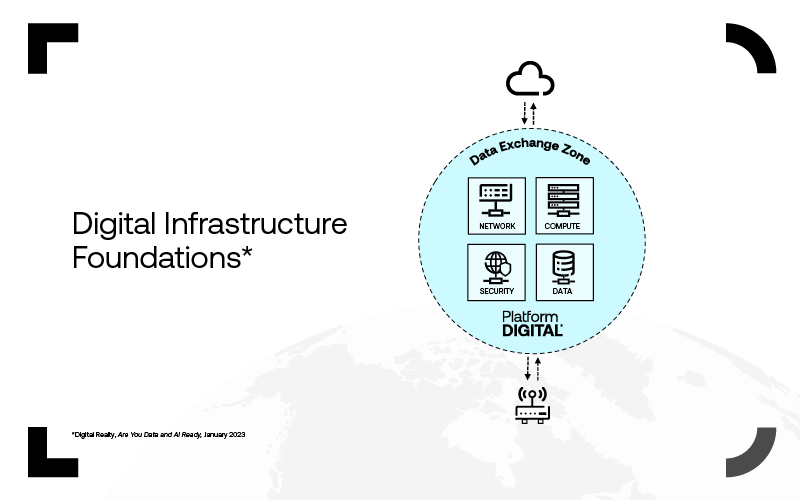

Because of the unique environment needed for HPC and artificial intelligence (AI), IT departments are implementing distributed infrastructures that remove Data Gravity barriers. Data Gravity simply means that data has a gravitational pull: as a data set grows, it attracts applications and services, which in turn create even more data. Supporting this distributed infrastructure requires four strategic digital foundations:

- Network

- Compute

- Data

- Security

In this blog, we’ll focus on how the compute foundation of HPC drives modern IT infrastructure strategy and development.

High-performance compute enables the transaction, processing, and analysis of data distributed across a global network. With IT leaders seeing their enterprises already producing exponentially larger datasets than ever before, organizations that rely on legacy architecture put their business at a distinct disadvantage.

Why high-performance compute and why now?

Advanced enterprises create data and AI-ready architectures designed for the increased load of data. They do this by putting data at the center of modern IT infrastructure, bringing the cloud and users to the data instead of the other way around. This accelerates processing, analysis, and transaction of data.

Companies that excel at this put themselves ahead of the competition. The growth of data is inevitable. IDC's Worldwide Datasphere report projects that data will increase by 573% each year by 2025.¹

All companies will notice the increase of data, but only a few will use it effectively to benefit their organizations.

Driven by expanded adoption of social and mobile technology, AI/machine learning (ML), and Internet of Things (IoT) data growth, modern enterprises demand a high-performance compute environment that can create, process, and exchange data.

Enterprise high-performance compute use cases

The growth of data, 573% each year by 2025, is projected to come from the exponential use of enterprise technology to service end users, grow business, and protect intellectual property. The use cases below pinpoint sources of data growth and application of high-performance compute power to manage that growth.

- Generative AI (GenAI): As the growth of chatbots increases in enterprise internal and external operations, data created from usage of this technology holds important, actionable insights for businesses prepared to receive and interpret the information.

- Supply chain management: Companies with sophisticated forecasting and predictive models for their supply chains will outperform competitors.

- Financial transactions: Analysts predict the financial management portion of enterprises will continue to be a source of data expansion.

- Region-specific user data processing: Data privacy concerns remain top of mind for customers, inspiring local, regional, and country-specific legislation on data sovereignty. Processing data at the point of collection requires localized compute power via regional HPC clusters.

These examples show how businesses can use a simple strategy to control and make the most of their exponential data growth.
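The region-specific use case above can be sketched in a few lines of Python. This is a simplified illustration, assuming each record carries a country code and that every country maps to exactly one regional HPC cluster. The cluster names, the mapping table, and the `group_by_home_cluster` function are hypothetical.

```python
from collections import defaultdict

# Hypothetical mapping from country code to the regional cluster that is
# allowed to process that country's user data.
CLUSTER_FOR_COUNTRY = {
    "DE": "eu-cluster",
    "FR": "eu-cluster",
    "US": "us-cluster",
    "SG": "apac-cluster",
}


def group_by_home_cluster(records):
    """Batch records so each one is processed only in its home region."""
    batches = defaultdict(list)
    for record in records:
        cluster = CLUSTER_FOR_COUNTRY.get(record["country"])
        if cluster is None:
            # Refuse to guess: unmapped data should not leave its origin.
            raise ValueError(f"No regional cluster for {record['country']!r}")
        batches[cluster].append(record)
    return dict(batches)


if __name__ == "__main__":
    sample = [
        {"user": "a", "country": "DE"},
        {"user": "b", "country": "US"},
        {"user": "c", "country": "FR"},
    ]
    for cluster, batch in group_by_home_cluster(sample).items():
        print(cluster, [r["user"] for r in batch])
```

Rejecting unmapped countries, rather than falling back to a default region, is a deliberate choice in this sketch: it keeps data from quietly crossing a border when the mapping is incomplete.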

What’s the best strategy for high-performance compute?

As enterprise IT leaders consider where to play in the HPC space and how to win, a few strategic considerations stand out. The key to strategic development in HPC is converting from legacy IT architecture to modern HPC architecture.

Legacy architectures place applications, capacity, and data in the clouds, increasing latency and Data Gravity. Data and AI-ready architectures place data exchange zones between the clouds and the edge.

These data exchange zones empower enterprises to control and leverage data for agility and data-driven decision making. Just as important, data exchange zones allow companies to connect digital assets in the places where they do business across the globe, reducing exposure to data sovereignty risks while speeding up access to data for their team members.

Data exchange zones contain network, compute, security, and data as digital foundations that function locally. Efficient enterprises partner with colocation providers who know their needs and possess a strong fabric of connectivity, giving enterprises quick access to their most important service providers.

Figure 1: Digital Realty, Pervasive Datacenter Architecture (PDx)®, 2021

As we shift our focus to the compute quadrant of the data exchange zone, we see both the risks and rewards of a data and AI-ready infrastructure. Let’s take a look:

The risks of not implementing HPC include:

- Unnecessary capital expenditures

- Disparate workload functions that negate the infrastructure cost savings of hybrid and multi-cloud workload deployments

- Slow innovation and internal roadblocks to opportunities for advancement

The rewards of high-performance compute infrastructures:

- A system that puts the enterprise in full control of the rising tide of data creation

- Lower exposure to data sovereignty fines

- Early identification of industry / customer trends and the ability to capitalize on them quickly

Enterprises would do well to host applications on-premises or with a trusted colocation partner while optimizing workloads and scaling HPC solutions.

Here’s why:

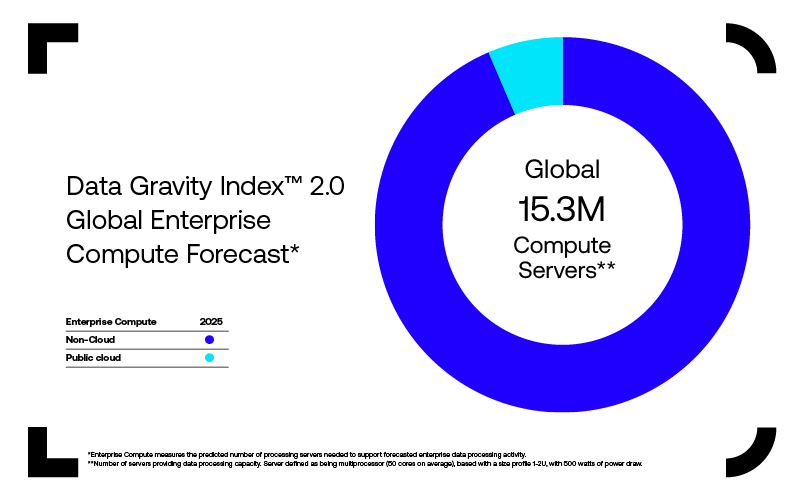

By 2025, global enterprise compute servers will total $15.3 million, with the vast majority being non-cloud servers, according to the Data Gravity Index™ 2.0.

Figure 2: Data Gravity Index™ 2.0, June 2023

Most enterprises will soon process their application data on their own servers. The key to winning the race is knowing how to scale up quickly at a lower cost. Data centers help smart enterprises make the leap ahead.

What’s more, most data is created and used outside the public cloud, according to Digital Realty’s Data Gravity Index™ 2.0, revealing the need for a hybrid multi-cloud architecture to manage large data volume and scale.

Digital infrastructure foundation: Compute summary

With high-performance compute, enterprises can scale the processing of large amounts of data through HPC clusters and dependable, HPC-optimized interconnects. The result is a system that can transact and process data and enable fast decision-making.

A key imperative for infrastructure design is to contain complexity at every point in the process. The compute side of the data exchange zone can create complexity as data expands. Pre-integrated solutions offer tested, dependable systems on which to build a data and AI-ready digital infrastructure.

Compute is one of four digital infrastructure foundations. The others include network, to connect data, users, and applications locally; security, for protecting the entire infrastructure design; and data, to implement localization of data sets and secure data exchange.

Discover more about the four digital infrastructure foundations and how they can transform your high-performance computing journey by downloading our Data and AI Ready eBook.

¹IDC, Worldwide IDC Global DataSphere Forecast, 2023–2027: It's a Distributed, Diverse, and Dynamic (3D) DataSphere, April 2023
